OSGeodata Discovery

Revision as of 1 November 2006, 06:52

Need for geographic metadata exchange

Geoinformation content needs to be published and disseminated before users can find it.

This is a compiled list of WMS servers and layers.

Following are some thoughts to help discovery/search services/brokers and their webcrawlers/harvesters/aggregators to do a better job. So the main question here is: What can content owners do to promote their information? It's mainly registration, declaration and citation.

'Geographic catalog' (catalog service) and 'inventory' are rather data-provider-centric names; we prefer a user-centric approach to the management of geodata and geometadata. See also OSGeodata for further discussion.

First of all we need a geographic metadata exchange model. Then we need a geographic metadata exchange protocol.

Keywords: Open access to and dissemination of geographic data (geodata) and information; Metadata; Finding, harvesting or discovery of geodata and web map services; Interoperability; Integration; Service binding; Spatial data infrastructure; Standards.

Vision and Scenario

"We don’t know what people will want to do, or how they will want to do it, but we may know how to allow people to do what they want to do when they want to do it". (ockham.org)

User needs

- End users need search services to discover geographic information.

- Geodata owners need a metadata management tool (which implies an internal metadata model).

- Geodata owners and service providers need (i) a metadata exchange model, (ii) an encoding of it, as well as (iii) a protocol for the exchange, dissemination and sharing of metadata.

Geo-Metadata Network Vision

In the web and internet there are...

- Web accessible datasets.

- Web accessible metadata about datasets and/or WxS (WxS imply pointers to data access points).

- Web accessible datasets and metadata about datasets and/or WxS.

- A lot of other documents, like WCS, etc.

And we have...

- Desktop and Web GIS: Access local datasets, remote datasets and remote WxS through direct launch or "(Remote) Resource open...". Requires a protocol for a) online queries to search services and b) remote access to datasets and WxS.

- Meta-searchengines: List of georesources, especially ranked metadata records. Quality measures obvious to boost ranking. Requires web crawling and harvesting protocols, probably no cascaded online query needed.

- Metadata directories/registries: A (sorted) list of publicly accessible MD catalogs, including their records. Allows consolidated searching across catalogs/repositories. Harvesters update their indexes. Quality measures possible. Needs registration of catalogs/repositories. Requires harvesting as well as online query protocols.

- Metadata catalogs (repositories): Each *owns* a set of metadata about datasets and/or WxS. Typically metadata catalogs include a metadata editor; they are tightly coupled with georesources (aka registration of georesources). Sometimes online cascaded protocols with full metadata content are required.

Solutions around for webservices and protocols

- For querying a catalog, a directory/registry or a search engine online, search services like SRU or WFS basic (with filter) could be a solution. Both protocols include a bbox filter.

- For harvesting, full sets of metadata need to be moved around. Here OAI-PMH with DClite4G and/or WFS basic with DClite4G (without filter) could be a solution. A bbox query is not really a necessity here, as harvesting is done in a pre-processing step. Compared to OAI-PMH, WFS has version negotiation but lacks a profile for incremental updating and an extension to handle large sets of metadata records (resumptionToken).

- For moving large datasets around, file sharing protocols like BitTorrent could do, which imply their own registry anyway? These lack bbox queries.

- For web crawling, HTTP is enough.

Issues:

- How does a user search for remote georesources?

- Is the discovery component (as opposed to a directory) part of a desktop GIS?

- Where does the searchable geo-metadata reside?

- For accessing directories, would LDAP be a candidate alongside SRU or WFS?

- Repository managers should be able to disaggregate and re-aggregate metadata records (e.g. about the same dataset) as required.

A preliminary scenario

- Users search or browse through metadata records. They use a web app or a search component of a desktop GIS (remark: users don't search services per se).

- 'Search service providers' enable the discovery of geodata and 'filter services', like transformation services (note: WMS is a 'data access service' and belongs to geodata, not to filter services).

- 'Search service providers' gather (harvest) their information from 'data/filter service providers' and need a protocol to do this.

- 'Data/filter service providers' offer metadata over this protocol. They typically also implement 'data access services' (WMS, WFS) or they offer 'filter services'.

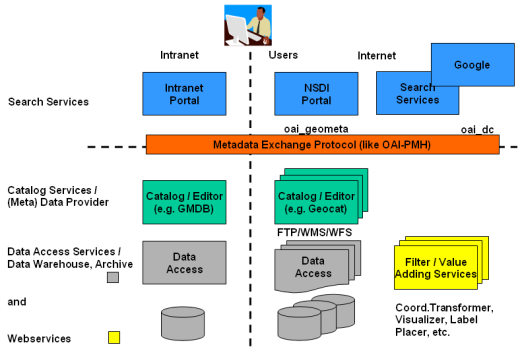

- Diagram: Architectural sketch about the relationships between a 'metadata exchange model' (as part of a metadata exchange protocol, data provider and search tools) and a 'metadata management model' (as part of metadata management and GIS tools).
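The scenario can be pictured as a tiny in-memory model: providers offer metadata records, a search service harvests them, and users search the harvested index by bounding box. This is only a sketch; all class names, field names and sample records are illustrative assumptions, and the box test ignores boxes that cross the antimeridian.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Record:
    title: str
    kind: str    # "geodata" (incl. data access services such as WMS) or "filter service"
    bbox: tuple  # (west, south, east, north) in degrees

def intersects(a, b):
    """True if two (west, south, east, north) boxes overlap or touch."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]

class Provider:
    """A 'data/filter service provider' that offers its metadata."""
    def __init__(self, records):
        self._records = list(records)

    def offer_metadata(self):
        return list(self._records)

class SearchService:
    """A 'search service provider' that harvests and then answers queries."""
    def __init__(self):
        self.index = []

    def harvest(self, providers):
        for provider in providers:
            self.index.extend(provider.offer_metadata())

    def search(self, bbox):
        return [r.title for r in self.index if intersects(r.bbox, bbox)]

# Made-up sample records.
data_provider = Provider([
    Record("Swiss lakes WMS", "geodata", (5.9, 45.8, 10.5, 47.8)),
    Record("Iceland roads", "geodata", (-24.5, 63.3, -13.5, 66.6)),
])
filter_provider = Provider([
    Record("Coordinate transformation", "filter service", (-180, -90, 180, 90)),
])

service = SearchService()
service.harvest([data_provider, filter_provider])
print(service.search((8.4, 47.3, 8.7, 47.5)))  # a small box around Zurich
```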

Possible realization plan

- All: Let's first identify the use cases and then sort out (minimal) metadata for exchange and (exhaustive) metadata for own management.

- Service providers and data owners (= all): Let's first quickly decide on a metadata exchange model, together with an incremental specification process for future adaptations. This model probably borrows from Dublin Core with spatial and geo-webservice extensions.

- Data owners (and providers) only: Let's decide internally, within each organization, on the metadata management model while adhering to minimal metadata exchange protocols. This model probably borrows from ISO 19115/119 Core, FGDC and of course the minimal metadata exchange model (Dublin Core).
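To make "Dublin Core with spatial extensions" more concrete, here is a minimal Python sketch of how a record in such an exchange model might be encoded as XML. It is not the DClite4G schema: the `record` wrapper element, the function name and the sample values are assumptions for illustration. Only the Dublin Core element namespace and the DCMI Box style of writing the extent are taken from the Dublin Core specifications.

```python
import xml.etree.ElementTree as ET

DC = "http://purl.org/dc/elements/1.1/"  # Dublin Core element set namespace
ET.register_namespace("dc", DC)

def encode_record(title, identifier, west, south, east, north):
    """Encode a minimal exchange record; the wrapper element is an assumption."""
    record = ET.Element("record")
    ET.SubElement(record, f"{{{DC}}}title").text = title
    ET.SubElement(record, f"{{{DC}}}identifier").text = identifier
    # Spatial extent written in the style of the DCMI Box encoding scheme.
    ET.SubElement(record, f"{{{DC}}}coverage").text = (
        f"northlimit={north}; southlimit={south}; "
        f"westlimit={west}; eastlimit={east}"
    )
    return ET.tostring(record, encoding="unicode")

xml_text = encode_record(
    "Swiss lakes",                      # made-up sample values
    "http://example.org/data/lakes",
    west=5.9, south=45.8, east=10.5, north=47.8,
)
print(xml_text)
```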

Discovery of geodata and services

We are aware of two types of 'protocols' both already implemented and ready to go (given an encoded information according to a well specified model):

- Registry

- Autodiscovery

Registry

Action is required from geodata owners (either proactive or without indication) to register with service providers (webcrawlers, harvesters). Search services can then do better-focused crawling. Examples are OAI-PMH, Google Sitemaps or SOAP.

- Push principle.

- More load on resource owner, less load in indexing and filtering on service providers.

- If registered proactively, agreement from resource owners can be ensured.

- Examples: OAI-PMH, RSS pinging search services...
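As one illustration of the registry (push) approach, a data owner could list the URLs of their metadata files in a sitemap and submit it to a search service. The Python sketch below builds such a file using the `urlset`/`url`/`loc`/`lastmod` elements and the namespace of the Sitemaps protocol as published at sitemaps.org. The function name and the example URLs are assumptions.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries):
    """Return sitemap XML for an iterable of (url, last_modified_date) pairs."""
    # The namespace is set as a plain xmlns attribute on the root element.
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. YYYY-MM-DD
    return ET.tostring(urlset, encoding="unicode")

sitemap_xml = build_sitemap([
    ("http://example.org/metadata/lakes.xml", "2006-11-01"),   # made-up URLs
    ("http://example.org/metadata/rivers.xml", "2006-10-15"),
])
print(sitemap_xml)
```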

Autodiscovery

Geodata owners publish XML files of geometadata (exchange model) once on the web (=HTTP).

- Pull principle.

- Requires at least one relation - a URL or a tag like a class.

- Uses the UI to point to a content-rich XML-encoded feed, and therefore crosses from human mode to machine mode in a way that’s easy for both humans and machines.

- Autonomous/independent resource owner; no further action required from them.

- Possible use of links/relations to point to a (master) whole collection of resources (including pointers to these, like microformats.org).

- Examples: (X)HTML pages, Extended and XML encoded Dublin Core.
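The "at least one relation" idea can be sketched as follows: a crawler reads an (X)HTML page and looks for `link` elements that point to XML-encoded metadata. The code uses Python's standard `html.parser`. The choice of `rel="alternate"` with `type="application/xml"` as the marker is an assumption made for this sketch (it mirrors how feeds are commonly advertised), not a convention defined on this page.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

class MetadataLinkFinder(HTMLParser):
    """Collect absolute URLs of <link> elements that advertise metadata."""

    def __init__(self, base_url, rel="alternate", mime_type="application/xml"):
        super().__init__()
        self.base_url = base_url
        self.rel = rel              # assumed relation value
        self.mime_type = mime_type  # assumed media type
        self.found = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        a = dict(attrs)
        rels = (a.get("rel") or "").lower().split()
        if self.rel in rels and a.get("type") == self.mime_type and a.get("href"):
            # Resolve relative hrefs against the page URL.
            self.found.append(urljoin(self.base_url, a["href"]))

# Made-up home page of a data owner.
PAGE = """<html><head>
<link rel="stylesheet" href="/style.css">
<link rel="alternate" type="application/xml" title="Geometadata" href="/metadata/all.xml">
</head><body>Welcome</body></html>"""

finder = MetadataLinkFinder("http://example.org/index.html")
finder.feed(PAGE)
print(finder.found)
```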

There are some ideas around, such as embedding hints into the geometadata exchange model for augmented autodiscovery. See also THUMP from the IETF, which leads to something between registry and autodiscovery.

About search services

Search services include web-based databases, catalogs and search engines.

A search engine (for geodata discovery) either has a general purpose, with data from a brute-force search (horizontal, breadth first; size is key), or is based on structured, good-quality topical data (depth first, small is beautiful). The former is a market led by Google (so it's probably not our business), which does not exclude specialized businesses. The latter is originally a specialized market, but that does not exclude its merging into larger ones (tourism, etc.). It takes its input e.g. from focused crawling (cf. Chakrabarti) and/or is built upon partnerships and contains authoritative and reliable sources. Both try to provide access to resources from a single entry point. Google cannot do this because it caters for a broad, mainstream user base and covers just about anything on the internet; its content is mostly unstructured and not vetted (quality-proofed).

Why not just letting crawlers find XML data?

(...Or, why a new protocol at all?) Commercial web crawlers are estimated to index only approximately 16% of the total "surface web" and the size of the "deep web" [...] is estimated to be up to 550 times as large as the surface web. These problems are due in part to the extremely large scale of the web. To increase efficiency, a number of techniques have been proposed such as more accurately estimating web page creation and updates and more efficient crawling strategies. [...] All of these approaches stem from the fact that http does not provide semantics to allow web servers to answer questions of the form "what resources do you have?" and "what resources have changed since 2004-12-27?" (from: (Nelson and Van de Sompel et al. 2005)).
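The two questions in the quote map directly onto OAI-PMH requests: `ListIdentifiers` answers "what resources do you have?", and its `from` argument restricts the answer to what has changed since a given date. The short Python sketch below only builds the request URLs. The base URL is a hypothetical repository endpoint and `oai_request` is a helper name invented here.

```python
from urllib.parse import urlencode

def oai_request(base_url, verb, **arguments):
    """Build an OAI-PMH request URL for a (hypothetical) repository endpoint."""
    query = {"verb": verb}
    # 'from' is a Python keyword, so callers pass from_ and the
    # trailing underscore is stripped here.
    for key, value in arguments.items():
        query[key.rstrip("_")] = value
    return base_url + "?" + urlencode(query)

BASE = "http://example.org/oai"  # assumed endpoint

# "What resources do you have?"
everything = oai_request(BASE, "ListIdentifiers", metadataPrefix="oai_dc")

# "What resources have changed since 2004-12-27?"
changed = oai_request(BASE, "ListIdentifiers", metadataPrefix="oai_dc", from_="2004-12-27")

print(everything)
print(changed)
```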

How to help discovering resources

Both approaches, registry and autodiscovery, need awareness. So in both cases, means to guide webcrawlers to XML-encoded content or services are helpful. What about:

- Icons on top level home pages with links pointing to metadata?

- A 'friend' attribute in a (yet to be re-defined ISO 19115) metadata model as described in some OAI guidelines? Note that this attribute is not at the level of a metadata instance/record but at the level of sets (of metadata records).

Distributed Searching vs. Harvesting

There are two possible approaches (from: Implementing the OAI-PMH - an introduction):

- Distributed, cross or parallel searching of multiple archives based on a protocol like Z39.50.

- Harvesting metadata from federated meta-databases into one or more 'central' services - bulk moving the data to the user interface.

- US digital library experience in this area (e.g. NCSTRL) indicated that cross searching is not the preferred approach: distributed searching of N nodes is viable, but only for small values of N (< 100). It has advantages if you don't want to give away your metadata.

The following are alternatives to harvesting (remark: all are considered inappropriate, not established, or at least 'painful' compared to OAI-PMH):

- Distributed searching:

  - WFS - the OGC thing - designed for geographic vector data exchange services

  - Z39.50 and SRW - the bibliographic data exchange protocol things (SRW is the more lightweight and 'modern' successor of Z39.50)

- 'Find and bind':

  - UDDI - the SOAP thing

Problems with distributed searching: (from: Implementing the OAI-PMH - an introduction)

- Collection description: How do you know which targets to search?

- Query-language problem: Syntax varies and drifts over time between the various nodes.

- Rank-merging problem: How do you meaningfully merge multiple result sets?

- Performance: tends to be limited by slowest target; difficult to build browse interface
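Two of these problems, performance and rank merging, show up even in a toy model. In the Python sketch below, each target is a plain function standing in for a remote node (an assumption; no real protocol is involved), one of them artificially slow. The overall query takes as long as the slowest target, and because scores from different nodes are not comparable, the sketch falls back to interleaving results by rank position, which is just one naive merging strategy.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from itertools import zip_longest

def distributed_search(targets, query):
    """Query every target in parallel and interleave their ranked result lists.

    targets: dict mapping a node name to a callable(query) -> ranked list of hits.
    """
    with ThreadPoolExecutor(max_workers=len(targets)) as pool:
        futures = [pool.submit(search, query) for search in targets.values()]
        # Waiting for every result blocks until the slowest target answers.
        result_sets = [f.result() for f in futures]
    # Round-robin by rank position instead of comparing node-specific scores.
    merged = []
    for tier in zip_longest(*result_sets):
        merged.extend(hit for hit in tier if hit is not None)
    return merged

def fast_node(query):
    return [f"A1:{query}", f"A2:{query}"]

def slow_node(query):
    time.sleep(0.2)  # simulated slow target
    return [f"B1:{query}"]

start = time.perf_counter()
hits = distributed_search({"fast": fast_node, "slow": slow_node}, "lakes")
elapsed = time.perf_counter() - start
print(hits)
print(f"{elapsed:.2f} s")
```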

Weblinks

Geographic or geospatial information discovery and search services:

- Search / meta search engines:

  - geometa.info (still German only for linguistic processing reasons)

  - mapdex.org

  - ESRI's geography network

  - ExploreOurPla.net: Toponym search, WMS Layer, Map Interface

  - General: Google Maps, MSN live, Yahoo!

- Catalogs and Registries - machine oriented or experimental:

  - owscat: OGC Web Services Catalog by Tom Kralidis

  - SOAP service at refractions.net

  - Geodiscover at CGDI GeoNetWeb

  - GSDI Gateway (service temporarily disabled)

  - WMS, WFS, WxS, OWS lists

- Catalog and search software components:

  - uDig

  - ArcCatalog/ArcGIS